Artificial intelligence could be the defining technology of our time. Texas Engineers are hard at work refining and improving the technology, imagining new ways to deploy AI to solve important problems and putting up guardrails to protect users — and the technology itself.

By Nat Levy

The Cockrell School of Engineering at The University of Texas is at the forefront of a transformative journey, exploring the ethical dimensions of artificial intelligence (AI). In this feature, we uncover the school's pioneering initiatives, research, and individuals dedicated to shaping the ethical future of AI. Join us as we navigate this evolving landscape and witness the profound impact of AI on society, along with the innovative solutions that are shaping our technological future.

What you see above was written by ChatGPT, a program that in just over a year has become the face of modern artificial intelligence and the latest flashpoint in the debate over technology and society. This was a scientific experiment, so to our student readers, don’t try this at home!

For the uninitiated, you use ChatGPT by entering a command, in this case “write a magazine-style introduction about artificial intelligence at The University of Texas at Austin.”

It will quickly generate text, and there’s an opportunity to refine your ask. In my case, I gave it several follow-up prompts, like “make it shorter,” before getting the above result.

ChatGPT, and other programs like it that instantly generate words and images, brought AI to the masses over the last year, after decades of popping up in smaller applications. This moment represents the culmination of more than half a century of work by scientists and engineers to turn the sci-fi technology into a reality.

Texas Engineers are at the forefront of this AI revolution. They are rewriting the algorithms that power AI to make them smarter, remove biases and ensure reliability. And they’re finding new and exciting ways to deploy the technology to help people and improve processes.

“We’ve reached a critical point with AI — the tools are evolving at a rapid pace, and they will have huge impacts in many people’s daily lives. People are rightly worried about it as well. That’s why it’s important to find the right applications and ensure that it is helping rather than hurting humanity,” said Alex Dimakis, a professor in the Cockrell School’s Chandra Family Department of Electrical and Computer Engineering and a leader of several AI initiatives at The University of Texas at Austin.

The Year of AI

At UT, 2024 is the Year of AI.

The university will prioritize AI research and education, display its achievements at major events like SXSW, establish new partnerships with industry and government agencies and create more opportunities for collaborations across and beyond UT Austin.

Kicking off the Year of AI, UT just announced the establishment of the new Center for Generative AI. This center, led by Dimakis with collaborators across the Forty Acres, will create one of the most powerful academic AI computing clusters in the world at UT.

The center will feature 600 NVIDIA H100 GPUs (short for graphics processing units), specialized devices that enable rapid mathematical computations, making them ideal for training AI models. The Texas Advanced Computing Center (TACC) will host and support the computing cluster, called Vista, that will be among the largest of its kind.

With a core focus on biosciences, health care, computer vision and natural language processing (NLP), the new center will be housed within UT’s interdisciplinary Machine Learning Laboratory and co-led by the Cockrell School of Engineering and the College of Natural Sciences. In recognition of AI’s growth across industries, it also includes faculty members and support from Dell Medical School, as well as researchers from the School of Information and McCombs School of Business.

Left, Reality: NVIDIA H100 GPU, credit NVIDIA.com VS Right, OpenArt AI generation, using prompt: Create a spiral of hundreds NVIDIA H100 GPU chips, use perspective to make it seem like a repeating pattern.

In addition to the center, the University is already home to the National Science Foundation-supported AI Institute for Foundations of Machine Learning (IFML) and three of the 10 fastest university supercomputers in the world. UT’s AI computing capabilities are virtually unmatched.

“Artificial intelligence is fundamentally changing our world, and this investment comes at the right time to help UT shape the future through our teaching and research,” said President Jay Hartzell. “World-class computing power combined with our breadth of AI research expertise will uniquely position UT to speed advances in health care, drug development, materials and other industries that could have a profound impact on people and society. We have designated 2024 as the Year of AI at UT, and a big reason why is the combination of the trends and opportunities across society, our talented people and strengths as a university, and now, our significant investment in the Center for Generative AI.”

AI at the Cockrell School

UT’s work in AI dates back to the 1960s when Woody Bledsoe, who developed the first version of facial recognition technology, and Bob Simmons, an innovator in natural language processing, joined the faculty. They stayed at UT for the remainder of their careers and jumpstarted AI research on the Forty Acres.

Today, there are more than 150 UT researchers spanning 30 disciplines and nearly all colleges and schools. Texas Engineers from all academic departments are infusing AI into their research. That includes innovations in the core technology, new applications and safeguards.

Dimakis’ current work focuses primarily on image recognition/reconstruction. He was inspired by the “white Obama” controversy of 2020, where an algorithm designed to make clear images out of pixelated photos turned the first Black president into a white man.

The Famous “White Obama” image, created when image recognition software attempted to unscramble a pixelized photo of President Barack Obama. “Ours” represents software created by Dimakis and his team. CREDIT: IFML

Left, Reality: Photo of Alex Dimakis taken by staff photographer VS Right, OpenArt AI generation, using the following prompt: Portrait of an engineer studying AI using image provided as subject, realistic digital painting, futuristic AI lab in the background, detailed facial features, high-tech AI equipment, professional attire, intense and focused expression, high quality, realistic, futuristic, detailed eyes, modern technology, futuristic setting, professional, atmospheric lighting.

His research showed that existing algorithms amplify biases that exist in the datasets. The inaccuracy stems from a key distinction: the algorithm tries to get the answer 100% right rather than produce a reasonable answer. It pulls not from a representative data sample, but instead tries to turn everything into the majority class, because this has the highest probability of being correct. That’s how you get a series of pixelated photos of people of different races, all turned white by the algorithm.
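The majority-class effect described above can be seen in a toy simulation. The sketch below is an illustration only, not the software built by Dimakis and his team: the 80/20 split, the labels and the function names are all assumptions. It imagines blurry photos that could plausibly belong to either group, then compares an algorithm that always picks the single most probable answer with one that draws answers in proportion to how likely they are.

```python
import random

# Assumed numbers for illustration: the blurry photo itself gives no clue,
# so the only information left is how common each group is in the training data.
TRAINING_SHARE = {"majority": 0.8, "minority": 0.2}


def most_likely_guess(shares):
    """Always return the single most probable class (best odds of being 'right')."""
    return max(shares, key=shares.get)


def sampled_guess(shares, rng):
    """Draw a class in proportion to its probability (a plausible, representative answer)."""
    labels = list(shares)
    weights = [shares[label] for label in labels]
    return rng.choices(labels, weights=weights, k=1)[0]


def minority_fraction(guesses):
    """Fraction of reconstructions that came out as the minority group."""
    return sum(g == "minority" for g in guesses) / len(guesses)


rng = random.Random(0)
n_images = 10_000
greedy = [most_likely_guess(TRAINING_SHARE) for _ in range(n_images)]
sampled = [sampled_guess(TRAINING_SHARE, rng) for _ in range(n_images)]

print(f"minority share in training data:   {TRAINING_SHARE['minority']:.2f}")
print(f"minority share, most-likely guess: {minority_fraction(greedy):.2f}")
print(f"minority share, sampled guess:     {minority_fraction(sampled):.2f}")
```

In this toy setup the most-likely strategy never produces a minority face at all, so a 20% group in the data becomes 0% in the output, while the sampled strategy lands close to the share found in the data.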

“The algorithm is so obsessed with winning rather than providing a plausible answer that it amplifies biases in pursuit of total accuracy.”

— Alex Dimakis, professor, Chandra Family Department of Electrical and Computer Engineering

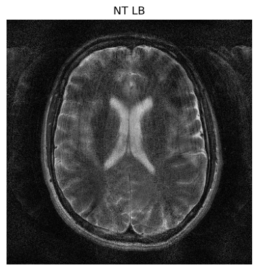

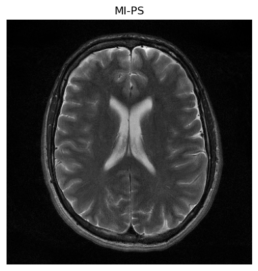

Dimakis isn’t the only Texas Engineer focused on improving the algorithms that power AI. Jon Tamir, assistant professor of electrical and computer engineering, is applying the technology to magnetic resonance imaging (MRI). These non-invasive scans require the patient to lie still in a scanner for long periods of time to produce a diagnostic image for clinicians to work from.

But some patients, like young children, can’t sit still for that long. That leads to an imperfect image that can be hard to interpret.

Tamir’s work focuses on using AI to speed up the scan by filling in the blanks on the MRI image. Since we know what an MRI image should look like, the model can be trained to extrapolate missing data points to provide a clearer picture. The AI model is used harmoniously with the scanner’s known imaging physics to generate clinically reliable results.

An MRI scan conducted 2.5x faster than standard clinical scans with patient advised to move around. Left: Image result using standard reconstruction technique. Right: Image result using AI algorithm.

Left, Reality: Jon Tamir Photo taken by staff photographer VS Right, OpenArt AI generated image using staff photo and prompt.

The ability to accurately generate data to fill out an MRI image could have a tremendous impact on the field. It could mitigate “noisy” images where the patient can’t sit still and it could reduce the amount of time patients need to spend in the MRI machine.

“We are collecting measurements, but we aren’t collecting all the measurements,” Tamir said. “We want to strike the right balance between AI and the imaging physics to help fill in missing data that is both consistent with the measurements we collect and what we know images that come from an MRI scanner should look like.”
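The balance Tamir describes, filling gaps in a way that agrees with both the collected measurements and what an image should look like, can be illustrated with a toy example. The sketch below is not the team’s method: it works on a short list of numbers rather than an MRI scan, and a simple smoothness rule stands in for the trained AI model. The function name `fill_in_missing` and the sample values are made up for illustration.

```python
def fill_in_missing(measured, n_points, n_iters=500):
    """Fill unmeasured points of a 1-D signal.

    measured: dict mapping index -> measured value (the data we actually collected).
    Alternates a 'prior' step (assumed here: signals are smooth) with a
    data-consistency step that restores every measured value exactly.
    """
    start = sum(measured.values()) / len(measured)
    estimate = [measured.get(i, start) for i in range(n_points)]

    for _ in range(n_iters):
        # Prior step: move each point to the average of its two neighbours.
        smoothed = []
        for i in range(n_points):
            left = estimate[max(i - 1, 0)]
            right = estimate[min(i + 1, n_points - 1)]
            smoothed.append((left + right) / 2)

        # Data-consistency step: never overwrite what was really measured.
        for i, value in measured.items():
            smoothed[i] = value

        estimate = smoothed

    return estimate


# Only three of nine points were measured; the other six are filled in.
measurements = {0: 0.0, 4: 4.0, 8: 0.0}
filled = fill_in_missing(measurements, n_points=9)
print([round(v, 2) for v in filled])
```

The measured points come back unchanged, and the gaps settle onto straight lines between them, which is what the smoothness assumption predicts. In a real system, a learned model of what MRI images look like would take the place of the neighbour-averaging step.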

Filling in the blanks is an important part of what AI can do, but so too is decision making. That is Amy Zhang’s focus. The assistant professor of electrical and computer engineering concentrates on the concept of reinforcement learning, which focuses on tasks with a reward without a clear path to get there, such as playing games like chess.

Zhang wants to apply reinforcement learning techniques to larger subjects like autonomous vehicles and robotics.

“This can be a tool to improve efficiency and safety, and help find new strategies and solutions that lead to more efficient energy use or are safer to nearby humans,” Zhang said.
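For readers curious what “a reward without a clear path to get there” looks like in practice, here is a minimal sketch of one classic reinforcement learning technique, tabular Q-learning. It is a textbook illustration, not Zhang’s research code, and the five-cell corridor, the reward of 1 and the parameter values are all assumptions. The program is never told which way to walk. It only learns, by trial and error, that a reward waits in the last cell.

```python
import random

N_STATES = 5          # corridor cells 0..4; the reward sits in the last cell
ACTIONS = (-1, +1)    # index 0 = step left, index 1 = step right
GAMMA, ALPHA = 0.9, 0.5  # discount factor and learning rate (assumed values)


def step(state, action):
    """Move within the corridor; reaching the last cell pays 1 and ends the episode."""
    nxt = min(max(state + action, 0), N_STATES - 1)
    done = nxt == N_STATES - 1
    return nxt, (1.0 if done else 0.0), done


def train(episodes=500, seed=0):
    rng = random.Random(seed)
    q = [[0.0, 0.0] for _ in range(N_STATES)]  # estimated value of each action in each cell
    for _ in range(episodes):
        state, done = 0, False
        while not done:
            a = rng.randrange(2)  # explore at random; Q-learning still learns the best values
            nxt, reward, done = step(state, ACTIONS[a])
            target = reward if done else reward + GAMMA * max(q[nxt])
            q[state][a] += ALPHA * (target - q[state][a])
            state = nxt
    return q


q_table = train()
# Best learned action for each non-terminal cell.
policy = ["left" if left > right else "right" for left, right in q_table[:-1]]
print(policy)
```

After training, the learned policy is to step right from every cell, even though the only feedback the program ever received was the reward at the end of the corridor.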

AI has a critical role to play in the energy sector. For the last four years Michael Pyrcz has co-led the Energy AI Hackathon at UT with colleague John Foster. Though the event is led by the Hildebrand Department of Petroleum and Geosystems Engineering and Jackson School of Geosciences’ Department of Earth and Planetary Sciences, it is open to everyone at UT.

When you think of AI, petroleum may not be the first thing that comes to mind. Pyrcz, however, says that engineers dealing with the subsurface are the original data scientists.

Drilling and mining for resources underground is high stakes. Even the slightest miss can cost millions of dollars. Over several decades, data-driven subsurface modeling became an important part of that industry, long before this technology showed up in other fields.

Accessibility is the name of the game for Pyrcz, who broadcasts his lectures on YouTube. The AI Hackathon starts with an extensive workshop designed to teach some fundamental AI, machine learning and programming concepts to make the event inviting for students with little to no background in these areas. Then, they are given a problem — last year it involved predicting which underground pump out of 20+ pipes in an area would fail first, using features such as temperature changes and pressure.

Michael Pyrcz

“We want students, when they go out to the energy industry or any other industry, to be ready and comfortable using machine learning technologies. It means making it accessible. For us it’s about building and supporting a community to be able to work together on these problems, and it’s open to anyone.”

— Michael Pyrcz, professor, Hildebrand Department of Petroleum and Geosystems Engineering

Pyrcz leads the Energy AI Hackathon at UT, which is open to all students. Above, scenes from the 2023 hackathon.

Pyrcz isn’t the only faculty member taking a community-based approach to AI. Krishna Kumar, assistant professor in the Fariborz Maseeh Department of Civil, Architectural and Environmental Engineering, recently received a $7 million grant from the National Science Foundation to integrate AI into civil engineering through a new community at UT called Chishiki-AI.

This community will bridge the gap between civil engineers, AI experts and cyberinfrastructure professionals to protect our most vital physical and virtual infrastructure. The goal is to infuse AI into the fabric of infrastructure design and maintenance. By employing AI to accelerate simulations and enhance structural health monitoring, this project intends to effectively use the extensive data produced in civil engineering to strengthen infrastructure and ensure a sustainable future.

“We have so much information about natural hazards, for example, and ideally we should be able to extract new science, find out new engineering designs to make the world a safer place,” said Kumar. “But we haven’t been able to leverage that. By developing novel AI algorithms to solve unique civil engineering problems, we can pioneer new ways to make the world safer and more efficient.”

Modeling of AI-assisted design of barriers to minimize landslide runout.

Left, Reality: Krishna Kumar Photo taken by staff photographer VS Right, OpenArt AI generated image using staff photo and prompt.

Why Now?

The idea of artificial intelligence actually predates computers, which were invented primarily to mimic the human brain.

These types of brain-like machines, called neural networks, date back to the 1940s. Following a period of innovation in the 1950s and 1960s, they fell out of favor as the computing power and available data weren’t up to snuff to make neural networks work well. That kicked off what some experts refer to as the first AI Winter.

In the last decade that has changed drastically. The flashpoint came in 2012 when veteran computer scientist and faculty member at the University of Toronto Geoff Hinton, and his students Alex Krizhevsky and Ilya Sutskever, deployed a neural network they created for the ImageNet challenge, an image recognition competition. Their neural network massively outperformed every other program in the competition. Google quickly scooped up the network and its creators — rebranding the technology as deep learning.

There’s a direct line from that moment to the rise of programs like ChatGPT. The renewed success of neural networks represented the kickoff of the modern AI revolution, and it lit a fire under Dimakis and his fellow AI researchers to dive deeper into these rebranded neural networks that now serve as the backbone for many modern AI programs.

Many different technologies fall under the broad banner of AI — machine learning, computer vision, autonomous systems and much more. They all share a common trait — the ability for a machine to learn from existing data to make predictions about the future and figure out open-ended problems.

“There’s been a lot of hype, but there have also been many real scientific breakthroughs in recent years,” Dimakis said. “The broader public realized that in November 2022 with ChatGPT, but this is a revolution that has been clear to people working in this space for about a decade. Currently, new breakthroughs are happening every few months. It’s incredible, but also hard to keep up.”

AI Guardrails

Generative AI has shown the ability to create, quickly and prolifically. With that creativity has come instances of fabrication. One well-known example is the case of the ChatGPT-generated legal brief that made up prior cases.

And in Zhang’s world of reinforcement learning, AI has shown a propensity to exploit any bugs in a game or system to reach its reward, cheating in essence. Though if the goal is to be human-like, that seems pretty spot on.

Several researchers repeated the phrase “garbage in, garbage out,” a popular reference in the tech world that means flawed data leads to a flawed outcome. However, in the case of AI, the programs that interpret the data are also part of that equation. And an AI algorithm not constrained by reality or facts is not going to produce a reliable outcome.

The best way to protect against these challenges is to have a true understanding of how the AI model works, and why it comes to the decision it does. That makes it easier to essentially fact-check its predictions and evaluate outcomes.

“In an earthquake scenario or natural disaster scenario, it can be very risky when you’re predicting where to send first responders and allocate resources,” said Kumar. “You’re putting people’s lives on the line, and it would scare me if an AI model that I can’t understand or explain is being used to make those decisions.”

Across disciplines, there is a sense that knowledge is power when it comes to managing AI. Zhang believes the best way to integrate AI into society is through an extensive public education campaign. People are scared of things they don’t know, and AI features plenty of unknowns.

If people by and large understand what AI systems can and cannot achieve, that informs how they are used and the necessary security measures. Zhang sees similarities to how general software security works today: extensive unit testing, general education courses on how to spot catfishing and scams, authentication and more.

“Knowing what the system is doing helps each person understand what it’s safe to apply that system to, or take its recommendations with a grain of salt,” Zhang said.

Ongoing AI research has major implications — life or death medical decisions, protecting our most vital infrastructure and more. That’s why one of the big focuses in refining AI is in erecting guardrails to ensure honesty and accuracy.

These guardrails could come in the form of government regulation of AI. In October 2023, President Joe Biden issued an executive order that establishes new standards for AI safety and security, protects Americans’ privacy, advances equity and civil rights, stands up for consumers and workers, promotes innovation and competition, advances American leadership around the world, and more.

Dimakis has played a role in AI regulation. He is part of the U.S. Chamber of Commerce AI Commission, which earlier this year published a report highlighting the promise of the technology, while also calling for a risk-based regulatory framework.

As the co-director of the NSF AI Institute for Foundations of Machine Learning, he also presented research in the U.S. Senate through an NSF AI institutes event.

Dimakis and Adam Klivans, computer science professor and director of the National Science Foundation AI Institute for Foundations of Machine Learning, present their work at a U.S. Senate event. Courtesy Alex Dimakis.

The process left Dimakis with several key takeaways:

People and companies should always be held liable for AI’s mistakes and they cannot hide behind AI.

If an AI system for screening applicants is hiring in a discriminatory way, the companies should be liable, not the AI system.

The development of AI should be an open ecosystem that seeks to attract the best talent from around the world, rather than shutting people out due to geopolitical and immigration concerns.

These major breakthroughs in AI should be open-source and open-data. Dimakis’ biggest concern about AI is that it could shift the balance of power if not available to all.

“If only one or two companies have these systems like ChatGPT that everyone is building on, you have an oligopoly forming and these tools can concentrate a lot of power into few entities. Models cannot be checked for security bugs, privacy leaks and robustness, unless the research community can stress test them. We need open models and open datasets and we are working hard for this at UT Austin.”

— Alex Dimakis, professor, Chandra Family Department of Electrical and Computer Engineering

For Tamir, and his work on medical applications, those regulatory structures are already in place. Approval from the U.S. Food and Drug Administration is a rigorous affair that includes significant clinical testing and external validation.

“AI that works for medical purposes should be regulated like a medical device or a new drug,” Tamir said.

Because this technology will lead to medical breakthroughs in the future, it should be treated like any other major medical project under development.

“Ultimately, it needs to be demonstrated that these things consistently work prospectively and not just retrospectively before they go out into the real world,” said Tamir. “Perhaps other sectors can learn from the medical world, where we must demonstrate through extensive external validation that a new technology is both safe and effective before it goes out to users.”

In my first interaction with ChatGPT, the program asserted that AI is becoming integrated into our daily lives.

And the Chamber report predicts AI will become a part of every business in the next 10-20 years.

But it doesn’t need to be everywhere, experts say. Much of the work happening at UT — and in labs around the world — is investigating where AI makes sense as a tool, but also where it doesn’t.

“I think what’s going to happen is we are going to settle down and realize how AI can actually work in our lives,” Pyrcz said. “And we’re also going to realize its limitations. There have been a lot of technologies over the last 50 years — computing technologies, modeling technologies — that looked wonderful but crashed on the rocks of reality. This is still big waves out in the ocean, let’s see what happens when it comes ashore.”

This line of discussion brought me back to my initial inquiry of ChatGPT. It took seven prompts to get to the introduction at the top of the story. The first response was a 500+ word soup that was far from reader-friendly.

It only took a few minutes to come to a passable solution, something that might take a writer like me hours or even days, depending on what else I have going on. But ChatGPT doesn’t have meetings all day, or emails to return. It can follow commands promptly and develop language that, at worst, serves as a good starting point for a draft.

That’s the point — as you feed the program more information, it returns a better result. And it does so instantly.

Still, a human writer armed with thorough research could write a better introduction because they have independently gathered and verified knowledge in a way that AI cannot. However, I left this exercise a little more open-minded than I came in (everything/everyone has their biases). I’m still somewhat concerned that ChatGPT and its contemporaries are coming for me and my profession, but I’m also more willing to accept this technology as a tool that can meaningfully contribute to my work. While plenty of experts insist that AI isn’t going to take our jobs, we can all see that change is on the horizon. And the people who use AI might just leapfrog those who don’t.

With that said, ChatGPT, take us home:

In conclusion, The University of Texas at Austin is not just contributing to the future of AI; it is actively shaping it. From healthcare breakthroughs to autonomous systems and sustainable solutions, the research here is poised to redefine industries and improve lives. The collaborative spirit and relentless pursuit of knowledge underscore UT Austin's commitment to addressing global challenges. As these innovations continue to unfold, the university stands as a beacon of innovation, inspiring a generation and leaving an indelible mark on the ever-evolving tapestry of human achievement. The journey continues, with UT Austin at the forefront of the AI revolution.

As our experts said, the output is only as good as what you put in. So, I’ll be more specific to ChatGPT: try again, but add some balance about the ethical implications: